AI for Tax Administrations: Governance and Innovation

Blog Author: Paul Dommel, Partner, Federal Tax and Finance, IBM

These events brought together senior tax administrators from the US IRS and other tax agencies that are confronting issues related to AI and the Modern Tax Agency. Participants from government, industry, and academia discussed the opportunities and challenges of using artificial intelligence in tax and revenue agency functions.

In prior blogs, we covered key points on using “AI to improve the taxpayer experience” and reported on the results of design thinking sessions on “Streamlining Tax Operations with AI.” At every point in the conversation, stakeholders emphasized the importance of effective governance and risk management. This blog focuses on governance and on how tax agencies can manage risk effectively while still encouraging innovation.

Leaders recognize that AI can help address challenges common to tax agencies across the world through digital assistants, more effective processing of paper returns, and improved fraud detection.

Risk and Governance

While appreciating the opportunities, tax agencies understand that using newer generative AI capabilities means adopting appropriate governance and risk mitigation strategies. In particular, generative AI, which uses existing information to create new content, brings wide-ranging efficiencies along with some risk. Tax agencies are keenly aware of the potential for bias and unexplainable analytics. Moreover, assuring taxpayers that their data is secure is key to establishing the trust agencies need for successful AI deployment.

Tax agencies want to make sure that technology delivers benefits and produces results that are transparent, unbiased, and appropriate. Roundtable participants identified key questions that AI and governance programs should consider on an ongoing basis to protect taxpayers and to ensure that efficiency goals are not met at the expense of quality or fairness. These include:

How was the AI trained?

- Has the model been exposed to robust data?

- Has the model been exposed to a large quantity and variety of data?

- What is the quality of the model architecture?

Is it aligned with a useful outcome / intended use case?

- Should we do it?

- Can the model be fine-tuned for different use cases?

- How can the model be updated or extended?

Does it meet agency / national policies and laws?

- How do models and their usage satisfy privacy and government regulations?

- Who is responsible for exposed information or a “wrong answer”?

How does an agency work to detect and mitigate AI bias and drift?

- How does the model detect and correct bias?

- Does the generative AI track drift over time?

- Is there human oversight of models?

Is it safe?

- Who has control over the generative AI model, input data, and output data?

- How can the agency ensure that confidential information is not exposed?

- What safety features and guardrails are in place?

Does it produce trusted outcomes?

- Can the model be audited?

- Can the outputs be explained and traced?

- How can drift and hallucinations be prevented?
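
One of the questions above asks whether drift is tracked over time. As a rough illustration of what such tracking can involve, the sketch below computes a Population Stability Index (PSI), a commonly used statistic for comparing a baseline sample of model scores with a more recent one. This is an assumption made for illustration, not a method discussed at the roundtables: the function name, the sample data, the bin count, and the 0.25 review threshold (a common rule of thumb) are all hypothetical choices.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two score samples; larger values indicate a larger shift."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")

    # Bin edges are fixed from the baseline so both samples share one grid.
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back if all baseline values are equal

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range are clamped into the end bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    expected = bin_shares(baseline)
    actual = bin_shares(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Hypothetical data: scores at deployment time versus scores seen later.
baseline_scores = [i / 100 for i in range(100)]
shifted_scores = [s + 0.5 for s in baseline_scores]

# Assumed rule-of-thumb threshold; an agency would set its own.
DRIFT_THRESHOLD = 0.25
needs_review = population_stability_index(baseline_scores, shifted_scores) > DRIFT_THRESHOLD
```

A check like this only flags that score distributions have moved. Deciding whether the shift matters, and what to do about it, would still fall to the human oversight that the questions above call for.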

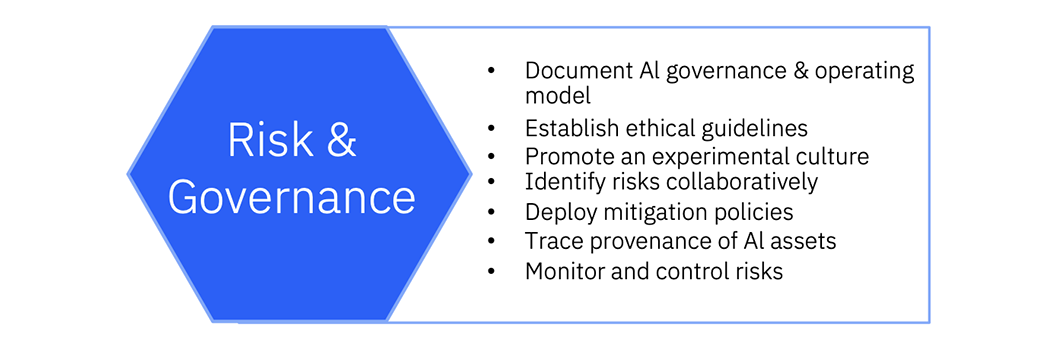

The graphic below illustrates a few key points around Risk Management and Governance that emerged during the roundtables.

Effective AI governance means embracing transparency, ensuring fairness and privacy, and fostering accountability, all of which demand ongoing attention. Human-centered AI development is critical to controlling AI risks. As governments increasingly use AI and other new technologies, they must focus on ethical governance, human-centered design, and collaborative innovation.

Innovation and Governance

Within a governance framework, fostering a culture of innovation is critical. Roundtable and design thinking participants wanted to leverage AI to prioritize the innovative thinking that agencies need to modernize, improve service, and collect the right amount of tax. At the same time, tax agencies must develop appropriate guardrails.

The discussions surfaced several ideas, though the topic merits additional thought. Governance programs, which are often federated, can serve as a forum for sharing best practices and lessons learned across the agency. Well-executed governance programs also provide a way to share workable, high-value opportunities across the organization. Concepts included creating a Center of Excellence to support business units with both technology insights and leading ideas on transparency, ethical use, and governance processes.

Supporting innovation while driving ethical, unbiased, and transparent AI is of paramount importance. Beyond the tax roundtables, the IBM Center for the Business of Government and the Partnership for Public Service hosted an event on Ethical and Effective AI. That discussion laid out approaches for maintaining a culture of innovation while delivering on ethical AI. These approaches include a heavy focus on AI literacy, collaborating to develop agency-specific AI strategies, and using hackathons to exchange information. Phaedra Boinodiris, IBM’s Global Leader for Trustworthy AI, discussed this in a blog: Artificial Intelligence Development for Effective Agencies: Strategies for Ethical and Effective Implementation. This is an important area that we will continue to research.